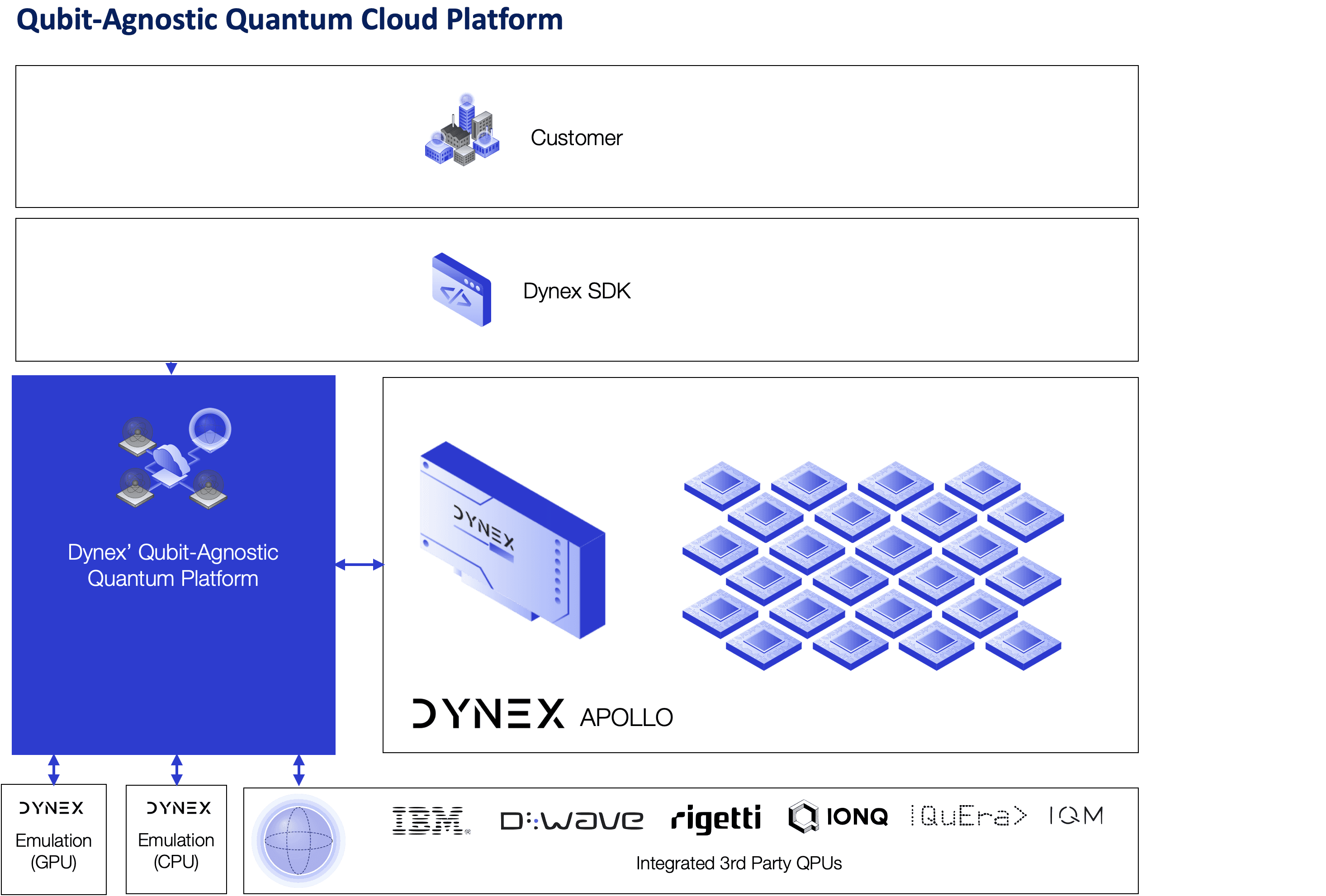

Dynex provides a qubit-agnostic computing platform that unifies diverse quantum and quantum-driven compute resources under a single execution and programming environment. Users access heterogeneous compute modalities through one consistent workflow for optimization, simulation, and probabilistic workloads, without being exposed to device-specific implementations.

The Dynex platform focuses on abstraction, orchestration, and interoperability, allowing end users to work at the problem level rather than the hardware level.

1. Platform Overview

1.1 Unified Execution Environment

Dynex presents all supported compute backends as standardized execution resources within a common runtime environment. These resources may include:

- proprietary Dynex compute systems,
- large-scale software-based emulation resources, and
- third-party quantum processing units operated by external providers.

A centralized orchestration layer manages workload submission, routing, execution coordination, and result handling. From the user’s perspective, workloads are expressed once and executed consistently, independent of the underlying compute modality.
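As a rough illustration of "expressed once, executed consistently", the sketch below models backends behind a single interface. Every name in it (`Backend`, `Orchestrator`, `BruteForceEmulator`, the `Qubo` type) is hypothetical and chosen for this example; none of it is the Dynex SDK or its API, and the brute-force solver only stands in for a real compute resource.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Protocol, Tuple

# Hypothetical problem type for this sketch: a QUBO as {(i, j): weight}.
Qubo = Dict[Tuple[int, int], float]


@dataclass
class Result:
    backend: str
    assignment: Dict[int, int]
    energy: float


class Backend(Protocol):
    """Anything with a name and a run() method counts as a backend here."""
    name: str

    def run(self, problem: Qubo) -> Result: ...


def qubo_energy(problem: Qubo, assignment: Dict[int, int]) -> float:
    """Energy of a binary assignment: sum of w * x_i * x_j over all entries."""
    return sum(w * assignment[i] * assignment[j] for (i, j), w in problem.items())


class BruteForceEmulator:
    """Stand-in backend: exhaustive search, only viable for tiny problems."""
    name = "emulator"

    def run(self, problem: Qubo) -> Result:
        variables = sorted({v for pair in problem for v in pair})
        best = None
        for bits in itertools.product((0, 1), repeat=len(variables)):
            assignment = dict(zip(variables, bits))
            energy = qubo_energy(problem, assignment)
            if best is None or energy < best.energy:
                best = Result(self.name, assignment, energy)
        return best


class Orchestrator:
    """Routes a problem to a registered backend and returns its result."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}

    def register(self, backend: Backend) -> None:
        self._backends[backend.name] = backend

    def submit(self, problem: Qubo, backend_name: str) -> Result:
        return self._backends[backend_name].run(problem)
```

In this sketch the caller builds the problem once and passes it to `Orchestrator.submit`; swapping the backend means changing only the `backend_name` argument.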

1.2 Hybrid and Heterogeneous Workflows

The platform is designed to support hybrid workflows that may combine different computational paradigms within a single problem lifecycle. Depending on availability, suitability, or performance requirements, workloads may be mapped to different backend resources dynamically.

Backend selection may take into account factors such as problem structure, execution constraints, and system availability, while remaining transparent to the application developer.
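The selection factors named above (problem structure, execution constraints, availability) can be sketched as a simple filter-and-rank step. This is an illustrative heuristic only: the `BackendInfo` and `Workload` records, the `SUITABLE` mapping, and the "smallest backend that fits" rule are all assumptions made for this example, not a description of how Dynex actually routes workloads.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BackendInfo:
    name: str
    kind: str            # e.g. "annealer", "gate", "emulator"
    max_variables: int   # largest problem the backend accepts
    available: bool


@dataclass(frozen=True)
class Workload:
    num_variables: int
    formulation: str     # "qubo" or "circuit" in this sketch


# Assumed mapping from problem formulation to backend kinds that can run it.
SUITABLE = {
    "qubo": {"annealer", "emulator"},
    "circuit": {"gate", "emulator"},
}


def select_backend(workload: Workload,
                   backends: List[BackendInfo]) -> Optional[BackendInfo]:
    """Return the smallest available backend that fits, or None."""
    kinds = SUITABLE.get(workload.formulation, set())
    candidates = [
        b for b in backends
        if b.available
        and b.kind in kinds
        and b.max_variables >= workload.num_variables
    ]
    # Prefer the tightest fit so larger resources stay free for larger jobs.
    return min(candidates, key=lambda b: b.max_variables, default=None)
```

A production scheduler would likely weigh queue time, cost, and noise characteristics as well; the point here is only that the caller describes the workload and never names a device.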

2. Dynex Compute Systems

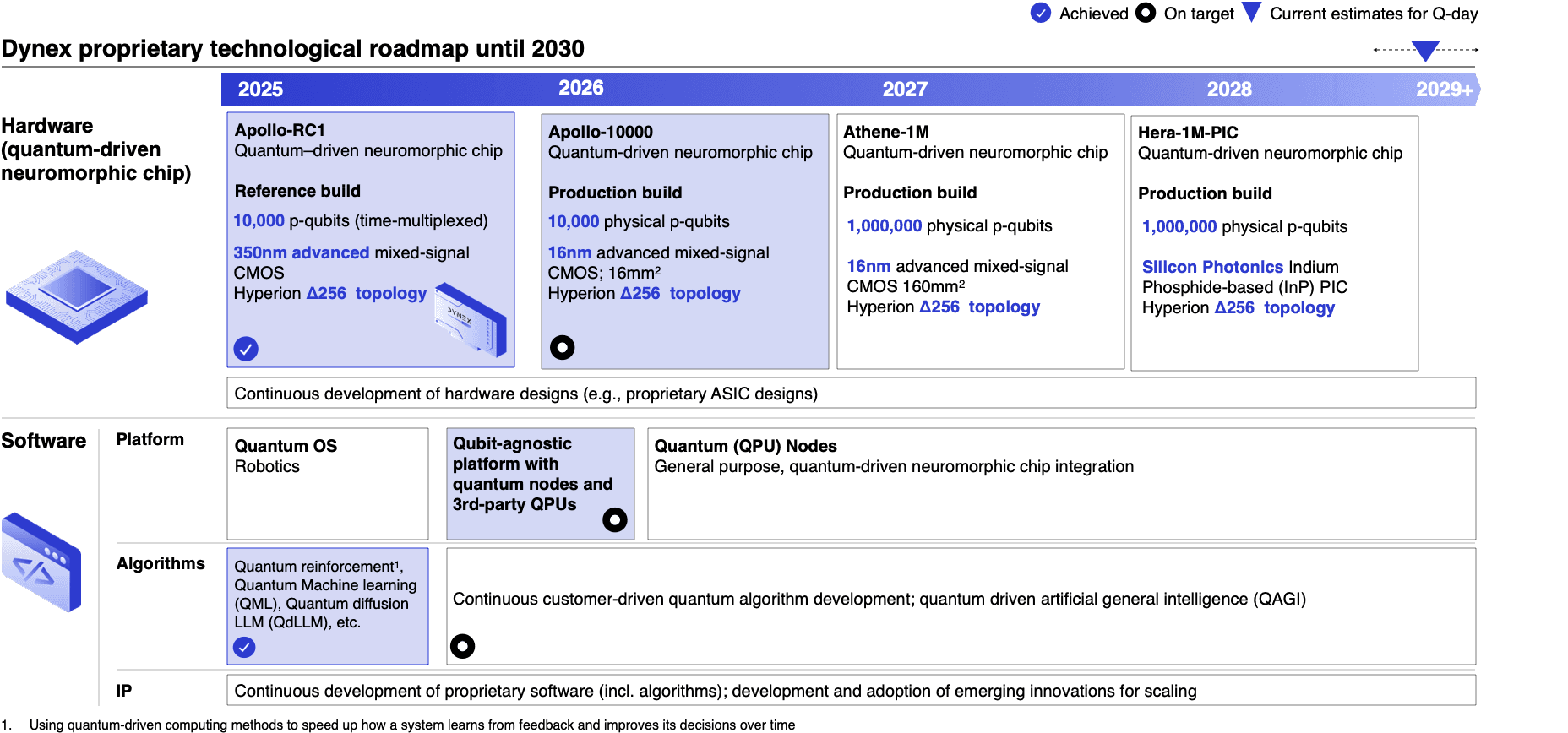

Certain Dynex-operated compute systems are optimized for probabilistic and energy-based problem formulations commonly used in optimization, sampling, and inference. These systems are integrated into the Dynex platform as native execution resources and are accessed through the same APIs and tooling as other supported backends.

From a platform perspective, these systems function as specialized accelerators for probabilistic computation, complementing both classical and quantum hardware resources.
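To make "probabilistic and energy-based formulations" concrete, the following sketch computes the Boltzmann distribution of a tiny QUBO by exhaustive enumeration: each binary state x has energy E(x), and its probability is proportional to exp(-E(x)/T). This is generic textbook material written in plain Python, not a model of Dynex hardware; the function names and the example matrix are assumptions for illustration.

```python
import itertools
import math
from typing import Dict, Tuple

Qubo = Dict[Tuple[int, int], float]


def energy(Q: Qubo, x: Tuple[int, ...]) -> float:
    """QUBO energy: sum of Q[i, j] * x[i] * x[j]."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def boltzmann(Q: Qubo, n: int, temperature: float = 1.0) -> Dict[Tuple[int, ...], float]:
    """Exact Boltzmann probabilities over all 2**n states (tiny n only)."""
    states = list(itertools.product((0, 1), repeat=n))
    weights = [math.exp(-energy(Q, s) / temperature) for s in states]
    partition = sum(weights)  # normalizing constant Z
    return {s: w / partition for s, w in zip(states, weights)}


# Two variables rewarded individually but penalized when both are 1.
Q_example = {(0, 0): -1.0, (1, 1): -1.0, (0, 1): 2.0}
probabilities = boltzmann(Q_example, n=2)
```

Optimization looks for the lowest-energy state, while sampling and inference draw states in proportion to these probabilities; lowering `temperature` concentrates the distribution on the minima.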

3. Integration of External Quantum Hardware

Dynex supports interoperability with a range of external quantum computing providers across different hardware paradigms, including gate-based systems, annealing systems, and analog quantum simulators.

| Provider | Device | Technology |
|---|---|---|
| IBM | Eagle, others | Superconducting transmon (gate model) |
| IonQ | Aria, Forte | Trapped-ion (gate model) |
| Rigetti | Ankaa series | Superconducting (gate model) |
| D-Wave | Advantage / Advantage2 | Quantum annealing |
| QuEra | Aquila | Neutral-atom / Rydberg analog simulation |
| IQM | Garnet, Emerald | Superconducting |

The platform abstracts differences in control interfaces, device topology, and execution semantics, enabling external quantum hardware to be used as complementary resources for experimentation, benchmarking, validation, or hybrid workflows.

4. Algorithmic Emulation Resources

In addition to physical hardware, Dynex provides access to high-performance software-based emulation resources designed to support large problem instances, development workflows, and reproducible experimentation.

These resources integrate seamlessly with the same SDK and runtime environment, allowing users to develop, test, and validate workloads before or alongside execution on specialized hardware.

These resources offer:

- up to 1 million algorithmic qubits,
- deterministic reproducibility,
- flexible embedding, and
- compatibility with the same SDK used for Apollo and QPUs.
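Deterministic reproducibility in a software sampler usually comes down to controlling the random seed. The sketch below is a small simulated-annealing loop in plain Python that returns identical results for identical seeds. It is a generic illustration under that assumption; the function `anneal`, its parameters, and the cooling schedule are hypothetical and say nothing about how the Dynex emulation resources are implemented.

```python
import math
import random
from typing import Dict, List, Tuple

Qubo = Dict[Tuple[int, int], float]


def qubo_energy(Q: Qubo, x: List[int]) -> float:
    """QUBO energy: sum of Q[i, j] * x[i] * x[j]."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def anneal(Q: Qubo, n: int, seed: int, sweeps: int = 200,
           t_start: float = 2.0, t_end: float = 0.05) -> Tuple[List[int], float]:
    """Single-bit-flip simulated annealing driven by a private, seeded RNG."""
    rng = random.Random(seed)  # private generator: no shared global state
    x = [rng.randint(0, 1) for _ in range(n)]
    e = qubo_energy(Q, x)
    for step in range(sweeps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / max(sweeps - 1, 1))
        for i in range(n):
            x[i] ^= 1  # propose flipping bit i
            e_new = qubo_energy(Q, x)
            if e_new <= e or rng.random() < math.exp((e - e_new) / t):
                e = e_new      # accept the move
            else:
                x[i] ^= 1      # reject: undo the flip
    return x, e
```

Calling `anneal` twice with the same `Q`, `n`, and `seed` yields the same final state and energy, which is what makes a run repeatable for debugging or for comparison against hardware results.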

GPU qNodes are particularly suited for:

- large-scale sweeps,
- embedding validation,
- debugging of Hamiltonian structures, and
- scenarios where massive problem sizes exceed practical physical qubit counts.

5. SDK and Programming Model

The Dynex SDK provides a unified programming interface for expressing optimization, probabilistic, and quantum-inspired workloads. Users interact with the platform through high-level representations such as:

- optimization problem formulations,
- probabilistic models,
- graph-based structures, and
- circuit-derived abstractions.

The SDK handles compilation, transformation, and backend adaptation internally, enabling portability across supported execution resources.
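One standard example of the kind of transformation a backend-adaptation layer may perform is converting a QUBO (binary variables x in {0, 1}) into an equivalent Ising model (spins s in {-1, +1}) using x = (1 + s) / 2. The sketch below implements that textbook conversion in plain Python. Whether and how the Dynex SDK performs this step internally is not stated in this document; the function names here are assumptions for illustration.

```python
from typing import Dict, Sequence, Tuple

Qubo = Dict[Tuple[int, int], float]


def qubo_to_ising(Q: Qubo) -> Tuple[Dict[int, float], Dict[Tuple[int, int], float], float]:
    """Return (h, J, offset) such that E_qubo(x) == E_ising(s) with s = 2x - 1."""
    h: Dict[int, float] = {}
    J: Dict[Tuple[int, int], float] = {}
    offset = 0.0
    for (i, j), w in Q.items():
        if i == j:
            # w * x_i = w/2 + (w/2) * s_i
            h[i] = h.get(i, 0.0) + w / 2
            offset += w / 2
        else:
            # w * x_i * x_j = w/4 * (1 + s_i + s_j + s_i * s_j)
            key = (min(i, j), max(i, j))
            J[key] = J.get(key, 0.0) + w / 4
            h[i] = h.get(i, 0.0) + w / 4
            h[j] = h.get(j, 0.0) + w / 4
            offset += w / 4
    return h, J, offset


def qubo_energy(Q: Qubo, x: Sequence[int]) -> float:
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def ising_energy(h: Dict[int, float], J: Dict[Tuple[int, int], float],
                 offset: float, s: Sequence[int]) -> float:
    linear = sum(bias * s[i] for i, bias in h.items())
    quadratic = sum(coupling * s[i] * s[j] for (i, j), coupling in J.items())
    return linear + quadratic + offset
```

Because the two energies agree on every assignment, a problem written once as a QUBO can be handed to a device that natively expects Ising coefficients without the user rewriting it.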

6. Runtime and Deployment Model

6.1 Managed Execution

The Dynex runtime environment is designed for flexible, managed execution. Compute resources may be provisioned dynamically, workloads may be rerouted as needed, and results may be returned incrementally or upon completion, depending on workload characteristics.
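The difference between incremental and on-completion result delivery can be sketched with a Python generator: the same search either streams each improved solution as it is found, or is drained to return only the final one. The functions `stream_improvements` and `run_to_completion` are hypothetical names for this example, and the exhaustive search merely stands in for a long-running job; this is not the Dynex runtime interface.

```python
import itertools
from typing import Dict, Iterator, Optional, Tuple

Qubo = Dict[Tuple[int, int], float]
Sample = Tuple[Tuple[int, ...], float]  # (state, energy)


def stream_improvements(Q: Qubo, n: int) -> Iterator[Sample]:
    """Yield a sample every time a strictly lower energy is found."""
    best: Optional[float] = None
    for state in itertools.product((0, 1), repeat=n):
        e = sum(w * state[i] * state[j] for (i, j), w in Q.items())
        if best is None or e < best:
            best = e
            yield state, e  # incremental delivery


def run_to_completion(Q: Qubo, n: int) -> Optional[Sample]:
    """Drain the stream and return only the final (best) sample."""
    last: Optional[Sample] = None
    for last in stream_improvements(Q, n):
        pass
    return last
```

A caller that wants early feedback iterates over `stream_improvements`; a caller that only needs the answer calls `run_to_completion`. Both share one underlying execution path.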

6.2 Distributed and Federated Operation

Compute resources supported by Dynex may operate in Dynex-managed environments, partner facilities, or distributed deployments. The platform coordinates execution across these environments while maintaining a unified user experience.

7. Application Areas

The Dynex platform supports a wide range of application domains, including but not limited to:

- Optimization
  - combinatorial optimization
  - scheduling and routing
  - portfolio and risk modeling
  - constraint satisfaction problems
- Sampling and Inference
  - probabilistic sampling
  - Bayesian inference
  - statistical and stochastic modeling
- Hybrid Quantum Workflows
  - circuit-inspired problem representations
  - benchmarking and verification across hardware types
  - exploratory hybrid execution strategies
- Machine Learning Acceleration
  - energy-efficient inference
  - probabilistic modeling
  - hybrid analog-digital workflows

8. Technical Documentation

Detailed technical specifications and hardware-level documentation for Dynex-operated compute systems are provided separately under controlled disclosure.

Note: Technical descriptions are intentionally non-exhaustive and do not disclose implementation details.