Next Generation Algorithms for Machine Learning

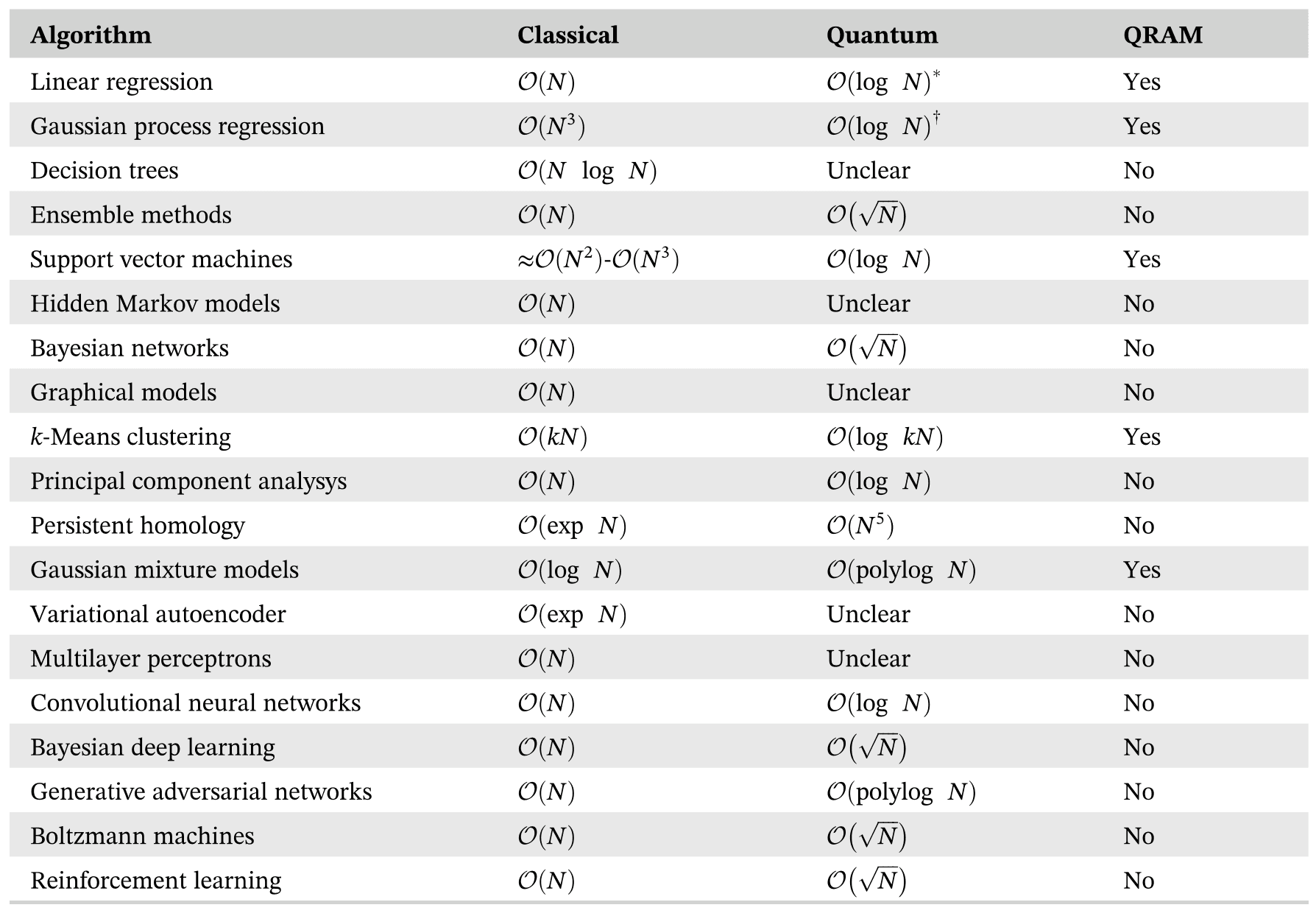

Quantum computing algorithms for machine learning harness quantum mechanics to enhance various machine learning tasks. Because quantum computing and neuromorphic computing share similar features, these algorithms can also be computed efficiently using Dynex technology, but without the constraints of limited qubit counts, error correction, or availability. The following list provides an overview of the main quantum machine learning algorithms reported in the literature:

Quantum Support Vector Machine (QSVM): QSVM is a quantum-inspired algorithm that aims to classify data using a quantum kernel function. It leverages the concept of quantum superposition and quantum feature mapping to potentially provide computational advantages over classical SVM algorithms in certain scenarios.

Quantum Principal Component Analysis (QPCA): QPCA is a quantum version of the classical Principal Component Analysis (PCA) algorithm. It utilizes quantum linear algebra techniques to extract the principal components from high-dimensional data, potentially enabling more efficient dimensionality reduction in quantum machine learning.

Quantum Neural Networks (QNN): QNNs are quantum counterparts of classical neural networks. They leverage quantum principles, such as quantum superposition and entanglement, to process and manipulate data. QNNs hold the potential to learn complex patterns and perform tasks like classification and regression, benefiting from quantum parallelism.

Quantum K-Means Clustering: Quantum K-means is a quantum-inspired variant of the classical K-means clustering algorithm. It uses quantum algorithms to accelerate the clustering process by exploring multiple solutions simultaneously. Quantum K-means has the potential to speed up clustering tasks for large-scale datasets.

Quantum Boltzmann Machines (QBMs): QBMs are quantum analogues of classical Boltzmann Machines, which are generative models used for unsupervised learning. QBMs employ quantum annealing to sample from a probability distribution and learn patterns and structures in the data.

Quantum Support Vector Regression (QSVR): QSVR extends the concept of QSVM to regression tasks. It uses quantum computing techniques to perform regression analysis, potentially offering advantages in terms of efficiency and accuracy over classical regression algorithms.

Examples

Quantum-Enhanced Generative AI: Dynex’s Breakthrough with qdLLM

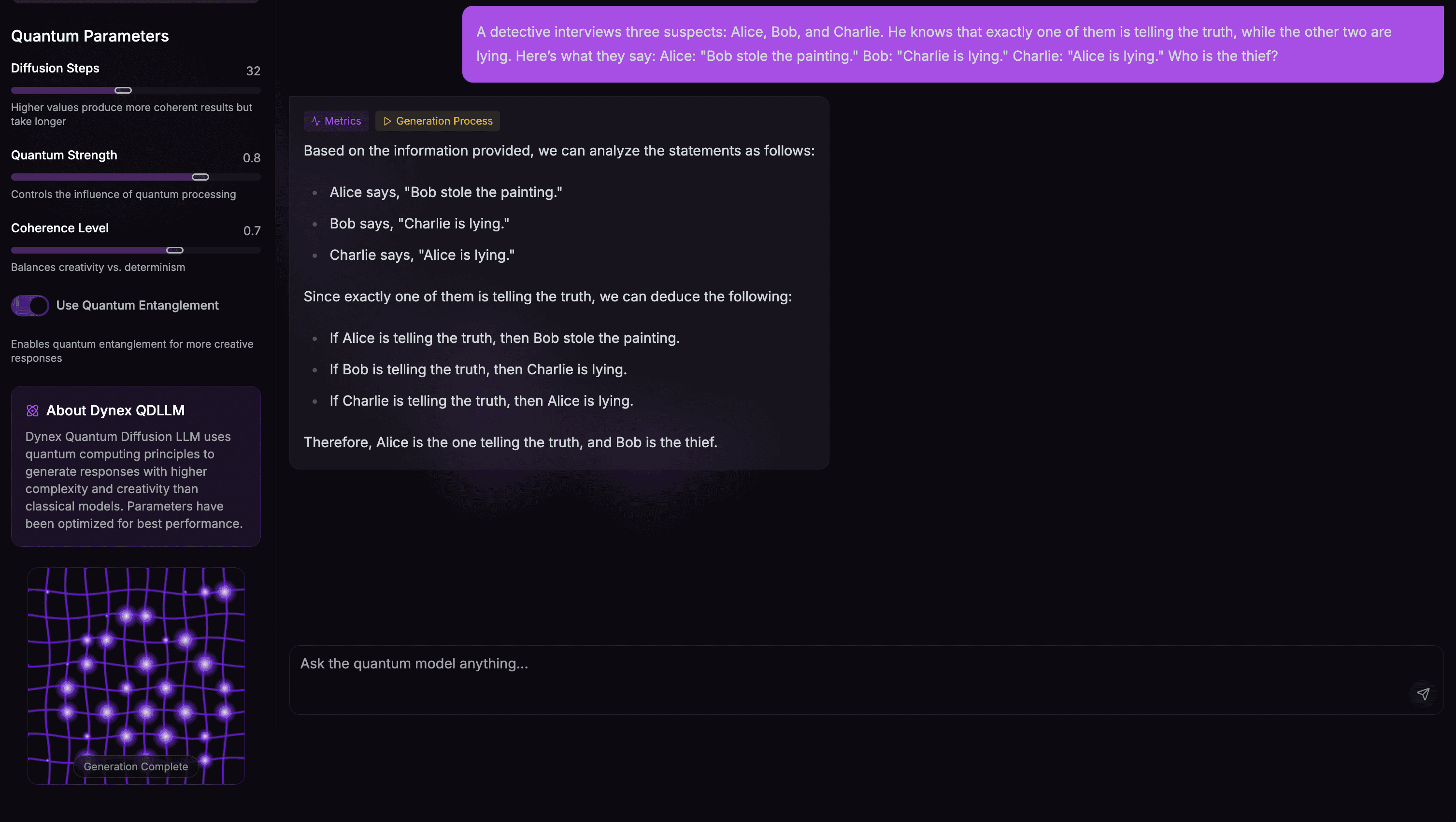

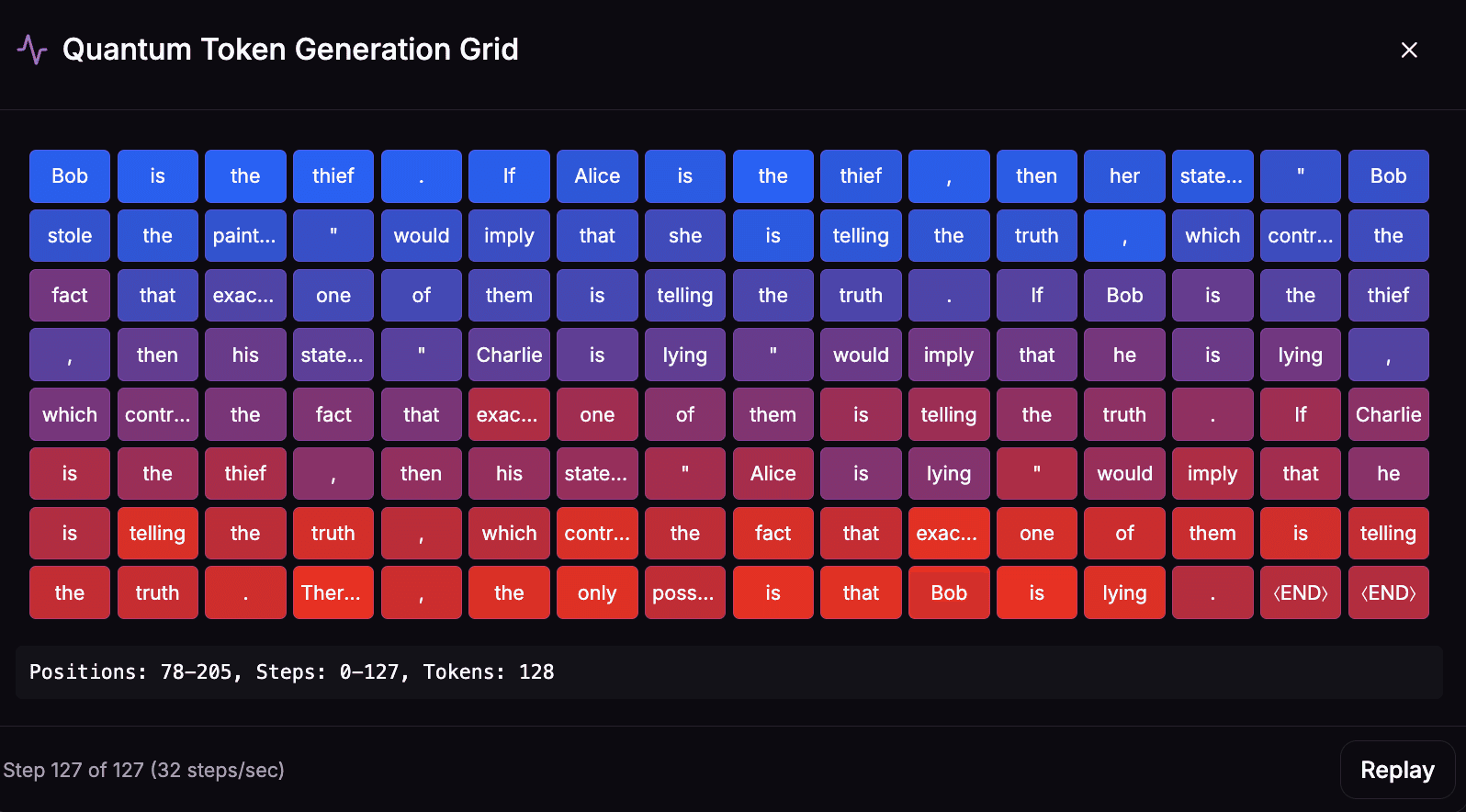

Recent advancements in natural language processing have shifted from traditional autoregressive models (ARMs) to diffusion-based approaches. A key innovation in this field is Large Language Diffusion Models (LLaDA), which utilize a forward data masking process and a reverse process, parameterized by a vanilla Transformer, to predict masked tokens. Building upon this foundation, Dynex has integrated a quantum diffusion layer, resulting in the Quantum-Diffusion-LLM (qdLLM)—a breakthrough in quantum-enhanced generative AI.

Technical Overview

Quantum-Diffusion-LLM combines diffusion models with quantum-inspired optimization to generate high-quality text. Unlike traditional autoregressive language models, our system employs a masked diffusion approach, predicting multiple tokens simultaneously through iterative denoising. The model corrupts the input by replacing tokens with mask tokens, then recovers the original sequence through a controlled reverse diffusion process.

The quantum advantage comes from the selection of tokens to unmask at each diffusion step. Our quantum-enhanced approach formulates this selection as a Quadratic Unconstrained Binary Optimization (QUBO) problem, which Dynex solves efficiently to identify optimal token combinations.

The system architecture has three main components: a core LLM for token prediction, a quantum module for token selection, and a hybrid orchestration layer that manages the diffusion process. During initial diffusion steps, quantum annealing establishes critical response elements. As confidence increases in later steps, the system switches to classical selection methods to refine details. This hybrid quantum-classical approach delivers responses with improved coherence, logical consistency, and factual accuracy compared to purely classical diffusion models, while maintaining reasonable inference times.
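To make the token-selection step more concrete, the following is a minimal sketch of how choosing which masked positions to reveal could be written as a QUBO. The objective is an assumption made for illustration (the source does not publish the qdLLM objective): `confidence`, `redundancy`, `build_unmask_qubo` and `solve_exhaustively` are hypothetical names, and an exhaustive search stands in for the annealing sampler so the example runs anywhere.

```python
from itertools import product


def build_unmask_qubo(confidence, redundancy, k, strength=2.0):
    """Return a QUBO as {(i, j): weight} over binary variables x_i,
    where x_i = 1 means "unmask position i in this diffusion step".

    Hypothetical objective (not taken from qdLLM itself):
      minimise  -sum_i confidence[i] * x_i
                + sum_{i<j} redundancy[i][j] * x_i * x_j
                + strength * (sum_i x_i - k)^2
    """
    n = len(confidence)
    Q = {}
    for i in range(n):
        # Expanding the constraint with x_i^2 = x_i gives a linear term
        # strength * (1 - 2k) per variable; the constant k^2 is dropped.
        Q[(i, i)] = -confidence[i] + strength * (1 - 2 * k)
        for j in range(i + 1, n):
            Q[(i, j)] = redundancy[i][j] + 2.0 * strength
    return Q


def qubo_energy(Q, x):
    """Energy of a binary assignment x under the QUBO Q."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def solve_exhaustively(Q, n):
    """Stand-in for an annealing sampler: try all 2^n assignments."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))


# Toy step: four masked positions, unmask two of them.
confidence = [0.9, 0.2, 0.8, 0.4]
redundancy = [[0.0] * 4 for _ in range(4)]
redundancy[0][2] = 0.5  # assumption: positions 0 and 2 should not be revealed together
Q = build_unmask_qubo(confidence, redundancy, k=2)
best = solve_exhaustively(Q, len(confidence))
unmask = [i for i, bit in enumerate(best) if bit]  # -> [0, 3]
```

Without the pairwise penalty, the two most confident positions (0 and 2) would be chosen. With it, the minimum shifts to positions 0 and 3, which is the kind of joint trade-off a pairwise formulation can express and a per-token ranking cannot.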

Superior Reversal Reasoning

One of the standout features of qdLLM is its advanced "reversal reasoning" capabilities. Traditional ARMs struggle with bidirectional understanding, as they generate text sequentially. qdLLM, leveraging quantum diffusion, excels in reasoning both forward and backward, producing more coherent and contextually accurate outputs. This capability is particularly advantageous in applications requiring deep logical inference and complex pattern recognition.

Parallelism and Scalability

By incorporating quantum diffusion, qdLLM achieves unprecedented parallelism and scalability. Unlike sequential ARMs, which are limited by step-by-step token generation, qdLLM enables simultaneous processing of multiple data points. This dramatically accelerates computation and allows for efficient scaling, making it well-suited for large-scale AI tasks.

Dynex's Quantum-Diffusion-LLM marks a paradigm shift in generative AI, combining quantum computing principles with cutting-edge language modelling to unlock new levels of reasoning, efficiency, and scalability.

> Medium Article: Introducing Dynex Quantum-Diffusion LLM (qdLLM): A Quantum-Enhanced Language Model

Quantum Natural Language Processing (QNLP)

Harnessing the power of quantum computing, quantum natural language processing algorithms offer unparalleled advantages in language processing tasks. Quantum algorithms excel in processing vast amounts of data simultaneously, enabling faster and more efficient language analysis. By leveraging quantum superposition and entanglement, our algorithm can explore multiple linguistic features in parallel, leading to more accurate and nuanced language understanding. Additionally, quantum computing's ability to handle complex linguistic structures with higher dimensionality allows for the extraction of deeper semantic meanings from text data. With these advancements, our quantum NLP algorithm promises to transform language processing, paving the way for more sophisticated text analysis and comprehension.

> YouTube: "Dynex Quantum-NLP (Q-NLP) Use Case Example"

Showcasing our Q-NLP trained special purpose chatbot for Canadian building codes 2019 vs. 2023! This advanced model assists with queries about specific codes and changes, delivering fast and precise responses. Training time? Just 292.19 seconds.

> YouTube: "Introducing Quantum Natural Language Processing on Dynex"

Video showcasing an end-to-end process of collecting data from websites, training the QNLP model on Dynex, and communicating with the resulting ChatGPT-style bot in real time.

Quantum Self-Attention Transformer

The quantum self-attention transformer circuit is designed to process word embeddings derived from sentences and generate new sentences based on quantum operations. The circuit begins by embedding the binary representation of input vectors into quantum states, followed by multiple layers of rotation and controlled gates to capture complex relationships between the inputs. After applying the Quantum Fourier Transform (QFT) and Grover's operator, the circuit uses a combination of Hadamard, T, and rotation gates to further process the information. The final output is a set of expectation values, which are processed with softmax to generate attention-weighted outputs. These outputs are then used to generate a new sentence by combining the embeddings with word vectors similar to the quantum-generated outputs, ensuring a coherent and contextually relevant sentence. This circuit essentially uses quantum computation to perform the role of attention in a transformer model, which is crucial for tasks like natural language processing, where understanding the importance of different words in a sentence is key to generating meaningful text. The quantum approach aims to leverage the potential speedups and parallelism inherent in quantum computing to perform these tasks more efficiently.
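The final classical step described above, turning expectation values into attention-weighted outputs with softmax, can be sketched as follows. This is an illustration only: the quantum circuit itself is not reproduced, the expectation values are made-up numbers, and the assumption that there is one expectation value per input word (as well as the names `softmax` and `attention_output`) is ours rather than the source's.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention_output(expectations, embeddings):
    """Weight each word embedding by the softmax of its expectation value."""
    weights = softmax(expectations)
    dim = len(embeddings[0])
    mixed = [sum(w * emb[d] for w, emb in zip(weights, embeddings))
             for d in range(dim)]
    return weights, mixed


# Hypothetical measured expectation values, one per input word.
expectations = [0.8, -0.1, 0.3]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, mixed = attention_output(expectations, embeddings)
```

The weights sum to one and preserve the ordering of the expectation values, so the word with the largest expectation value contributes most to the mixed embedding.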

Quantum Transformer Algorithm on Dynex (QTransform)

Transformers are a type of deep learning model that have transformed the field of artificial intelligence, particularly in natural language processing tasks. They excel at handling sequential data and understanding context over long sequences, enabling advancements in machine translation, text generation, and more. The discovery of a quantum transformer algorithm by Dynex represents a significant leap forward, combining the power of transformers with the unparalleled computational capabilities of quantum computing. This hybrid approach promises even faster processing speeds and enhanced performance, making it possible to tackle complex AI problems more efficiently than ever before. By leveraging quantum principles, such as superposition and entanglement, our quantum transformer algorithm can process vast amounts of data simultaneously, leading to more accurate and nuanced AI models, thereby pushing the boundaries of what AI can achieve.

> YouTube: "How-to: Quantum Transformer Algorithm on Dynex"

Mode-Assisted Quantum-RBM

The integration of neuromorphic computing into the Dynex technology signifies a transformative step in computational technology, particularly in the realms of machine learning and optimization. Dynex's advanced quantum computing leverages the unique attributes of neuromorphic dynamics, utilising neuromorphic annealing - a technique divergent from conventional computing methods - to adeptly address intricate problems in discrete optimization, sampling, and machine learning.

> Example: Mode-Assisted Unsupervised Quantum-RBM (PyTorch) on Dynex

> Example: Mode-Assisted Unsupervised Quantum-RBM (TensorFlow) on Dynex

Scientific background:

Advancements in Unsupervised Learning: Mode-Assisted Quantum Restricted Boltzmann Machines Leveraging Neuromorphic Computing on the Dynex Platform; Adam Neumann, Dynex Developers; International Journal of Bioinformatics & Intelligent Computing. 2024; Volume 3(1):91-103, ISSN 2816-8089

Quantum Frontiers on Dynex: Elevating Deep Restricted Boltzmann Machines with Quantum Mode-Assisted Training; Adam Neumann, Dynex Developers; 116660843, Academia.edu; 2024

Manukian, Haik, et al. "Mode-assisted unsupervised learning of restricted Boltzmann machines." Communications Physics 3.1 (2020): 105.

Dixit V, Selvarajan R, Alam MA, Humble TS and Kais S (2021) Training Restricted Boltzmann Machines With a D-Wave Quantum Annealer. Front. Phys. 9:589626. doi: 10.3389/fphy.2021.589626

Quantum Boltzmann-Machine

QBMs are quantum analogues of classical Boltzmann Machines, which are generative models used for unsupervised learning. QBMs employ quantum annealing to sample from a probability distribution and learn patterns and structures in the data.

> Example: Quantum-Boltzmann-Machine (PyTorch) on Dynex

> Example: Quantum-Boltzmann-Machine Implementation (3-step QUBO) on Dynex

> Example: Quantum-Boltzmann-Machine (Collaborative Filtering) on Dynex

> Example: Quantum-Boltzmann-Machine Implementation on Dynex

Scientific background:

Dixit V, Selvarajan R, Alam MA, Humble TS and Kais S (2021) Training Restricted Boltzmann Machines With a D-Wave Quantum Annealer. Front. Phys. 9:589626. doi: 10.3389/fphy.2021.589626; Sleeman, Jennifer, John E. Dorband and Milton Halem. “A Hybrid Quantum enabled RBM Advantage: Convolutional Autoencoders For Quantum Image Compression and Generative Learning.” Defense + Commercial Sensing (2020)

Quantum Support-Vector-Machine

QSVM is a quantum-inspired algorithm that aims to classify data using a quantum kernel function. It leverages the concept of quantum superposition and quantum feature mapping to potentially provide computational advantages over classical SVM algorithms in certain scenarios.

> Example: Quantum-Support-Vector-Machine Implementation on Dynex

> Example: Quantum-Support-Vector-Machine (PyTorch) on Dynex

> Example: Quantum-Support-Vector-Machine (TensorFlow) on Dynex

Scientific background:

Rounds, Max and Phil Goddard. “Optimal feature selection in credit scoring and classification using a quantum annealer.” (2017)

Quantum Feature Selection by Mutual Information

Quantum feature selection by mutual information is a process that uses quantum computing techniques to identify the most relevant features from a dataset, enhancing the efficiency and accuracy of machine learning models.
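As a rough illustration of how mutual-information feature selection can be cast as a QUBO, the sketch below rewards features that are informative about the target, penalises pairs of features that are informative about each other, and constrains the number of selected features to `k`. This is one common formulation and not necessarily the one used in the Dynex example. The function names, the weights `alpha` and `strength`, and the toy data are assumptions, and an exhaustive search replaces the sampler.

```python
from collections import Counter
from itertools import product
from math import log2


def mutual_information(a, b):
    """Mutual information (in bits) between two discrete sequences."""
    n = len(a)
    count_a, count_b, count_ab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((c / n) * log2(c * n / (count_a[x] * count_b[y]))
               for (x, y), c in count_ab.items())


def feature_selection_qubo(features, target, k, alpha=1.0, strength=4.0):
    """QUBO {(i, j): weight}: relevance on the diagonal, redundancy off it,
    plus the expansion of strength * (sum_i x_i - k)^2."""
    n = len(features)
    Q = {}
    for i in range(n):
        relevance = mutual_information(features[i], target)
        Q[(i, i)] = -relevance + strength * (1 - 2 * k)
        for j in range(i + 1, n):
            redundancy = mutual_information(features[i], features[j])
            Q[(i, j)] = alpha * redundancy + 2.0 * strength
    return Q


def select_features(features, target, k):
    """Minimise the QUBO by brute force (stand-in for an annealer)."""
    Q = feature_selection_qubo(features, target, k)

    def energy(x):
        return sum(w * x[i] * x[j] for (i, j), w in Q.items())

    best = min(product((0, 1), repeat=len(features)), key=energy)
    return [i for i, bit in enumerate(best) if bit]


# Toy data: the target encodes two hidden bits (a, b) as 2a + b.
target = [0, 0, 1, 1, 2, 2, 3, 3]
features = [
    [0, 0, 0, 0, 1, 1, 1, 1],  # f0: bit a, fully informative about a
    [1, 0, 0, 0, 1, 1, 1, 1],  # f1: noisy copy of f0 (redundant with f0)
    [0, 0, 0, 1, 0, 1, 1, 1],  # f2: noisy view of bit b (complementary)
]
selected = select_features(features, target, k=2)  # -> [0, 2]
```

Ranked by relevance alone, the two best features would be f0 and f1. The redundancy term makes the pair f0 and f2 the lower-energy choice, because f1 largely repeats what f0 already provides.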

> Example: Feature Selection - Titanic Survivals

Scientific background: Xuan Vinh Nguyen, Jeffrey Chan, Simone Romano, and James Bailey. 2014. Effective global approaches for mutual information based feature selection. In Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining (KDD ‘14). Association for Computing Machinery, New York, NY, USA, 512–521

Quantum Feature Reduction

Quantum feature reduction is a process that uses quantum computing techniques to identify the most relevant features from a dataset, enhancing the efficiency and accuracy of machine learning models.

> Example: Breast Cancer Prediction using the Dynex scikit-learn Plugin

Scientific background:

Bhatia, H.S., Phillipson, F. (2021). Performance Analysis of Support Vector Machine Implementations on the D-Wave Quantum Annealer. In: Paszynski, M., Kranzlmüller, D., Krzhizhanovskaya, V.V., Dongarra, J.J., Sloot, P.M.A. (eds) Computational Science – ICCS 2021. ICCS 2021. Lecture Notes in Computer Science, vol 12747. Springer, Cham

Dynex Quantum Computing Outperforms Traditional Methods

A new approach using simulated quantum annealing (SQA) to numerically simulate quantum sampling in a deep Boltzmann machine (DBM) was presented in [1]. The authors proposed a framework for training the network as a quantum Boltzmann machine (QBM) in the presence of a significant transverse field for reinforcement learning. However, they demonstrated that the process of embedding Boltzmann machines in larger quantum annealer architectures is problematic when huge weights and biases are needed to emulate the Boltzmann machine’s logical nodes using chains and clusters of physical qubits. On the other hand, quantum annealing has the potential to speed up the sampling process exponentially.

Dynex's quantum-as-a-service (QaaS) technology does not have these physical limitations and can therefore overcome such scaling problems and expand to large, real-world datasets and problems.

Mode-Assisted Quantum Restricted Boltzmann Machines

The integration of neuromorphic computing into the Dynex technology signifies a transformative step in computational technology, particularly in the realms of machine learning and optimization. Dynex's advanced technology leverages the unique attributes of neuromorphic dynamics, utilising neuromorphic annealing - a technique divergent from conventional computing methods - to adeptly address intricate problems in discrete optimization, sampling, and machine learning.

> GitHub: Dynex MA-QRBM Package

Scientific background: Advancements in Unsupervised Learning: Mode-Assisted Quantum Restricted Boltzmann Machines Leveraging Neuromorphic Computing on the Dynex Platform; Adam Neumann, Dynex Developers; International Journal of Bioinformatics & Intelligent Computing. 2024; Volume 3(1):91-103, ISSN 2816-8089

Efficient Quantum State Tomography on Dynex

Quantum state tomography is a process used in quantum physics to characterize and reconstruct the quantum state of a system. In simple terms, it is like taking a snapshot of a quantum system to understand its properties fully. In quantum mechanics, a quantum state represents the complete description of a quantum system, including its position, momentum, energy, and other physical quantities. However, unlike classical systems, where properties are well-defined, quantum systems often exist in superposition states, meaning they can simultaneously be in multiple states until measured. While traditional training methods perform rather poorly on this task, Dynex-computed training achieves near-perfect fidelity.
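For readers unfamiliar with the term, fidelity measures how close a reconstructed state is to the true one, with 1 meaning identical. The source does not say which definition it uses, so the sketch below assumes the common pure-state form, the squared magnitude of the overlap between two state vectors. The function name `fidelity` and the example states are illustrative.

```python
import math


def fidelity(psi, phi):
    """Pure-state fidelity |<psi|phi>|^2, normalising both vectors."""
    overlap = sum(complex(a).conjugate() * b for a, b in zip(psi, phi))
    norm = sum(abs(a) ** 2 for a in psi) * sum(abs(b) ** 2 for b in phi)
    return abs(overlap) ** 2 / norm


zero = [1, 0]                                # |0>
one = [0, 1]                                 # |1>
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # equal superposition of |0> and |1>

same = fidelity(zero, zero)        # identical states
orthogonal = fidelity(zero, one)   # perfectly distinguishable states
partial = fidelity(zero, plus)     # partially overlapping states
```

Identical states give a fidelity of 1, orthogonal states give 0, and the overlap between |0> and the equal superposition is one half up to floating-point rounding.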

> GitHub: Quantum Mode-assisted unsupervised learning of Restricted Boltzmann Machines

Scientific background: Quantum Frontiers on Dynex: Elevating Deep Restricted Boltzmann Machines with Quantum Mode-Assisted Training; Adam Neumann, Dynex Developers; 116660843, Academia.edu; 2024

Quantum-Boltzmann-Machine (QBM)

This example demonstrates a Quantum-Boltzmann-Machine (QBM) implementation that uses Dynex technology to perform the computations and compares it with a traditional Restricted-Boltzmann-Machine (RBM). The RBM is a well-known probabilistic unsupervised learning model that is trained with an algorithm called Contrastive Divergence. An important step of this algorithm is Gibbs sampling, a method that returns random samples from a given probability distribution. We conducted our experiments on the popular MNIST dataset, which is considered a standard benchmark in many machine learning and image recognition subfields. The implementation follows a highly optimised QUBO formulation.
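For reference, the classical baseline can be sketched in a few lines: one Contrastive Divergence step (CD-1) with a single round of Gibbs sampling, returning the mean squared reconstruction error. This is a toy illustration on a single 4-bit pattern rather than MNIST, and it is not the Dynex implementation. In the QBM described above, the sampling would instead be delegated to the annealer through a QUBO, which is not shown here. All names and hyperparameters are assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sample_hidden(v, W, c, rng):
    """Gibbs half-step: hidden probabilities and a binary sample given v."""
    probs = [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
             for j in range(len(c))]
    return probs, [1 if rng.random() < p else 0 for p in probs]


def sample_visible(h, W, b, rng):
    """Gibbs half-step: visible probabilities and a binary sample given h."""
    probs = [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
             for i in range(len(b))]
    return probs, [1 if rng.random() < p else 0 for p in probs]


def cd1_step(v0, W, b, c, lr, rng):
    """One CD-1 update in place; returns the reconstruction MSE."""
    ph0, h0 = sample_hidden(v0, W, c, rng)   # positive phase
    pv1, v1 = sample_visible(h0, W, b, rng)  # reconstruction
    ph1, _ = sample_hidden(v1, W, c, rng)    # negative phase
    for i in range(len(b)):
        for j in range(len(c)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        b[i] += lr * (v0[i] - v1[i])
    for j in range(len(c)):
        c[j] += lr * (ph0[j] - ph1[j])
    return sum((x - p) ** 2 for x, p in zip(v0, pv1)) / len(v0)


rng = random.Random(0)
n_visible, n_hidden = 4, 2
W = [[0.0] * n_hidden for _ in range(n_visible)]
b = [0.0] * n_visible
c = [0.0] * n_hidden
pattern = [1, 0, 1, 0]
errors = [cd1_step(pattern, W, b, c, lr=0.5, rng=rng) for _ in range(200)]
```

With zero-initialised parameters the first reconstruction is uniform, so the first error is 0.25. As the biases and weights adapt to the pattern, the error typically falls well below that starting value.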

Figure: The QBM converges much faster to a low Mean Squared Error (MSE) than the traditional RBM, which means significantly fewer training iterations are required. In addition, the achieved MSE is much lower, meaning the models created by the QBM have higher accuracy. This finding is in line with the results from the papers referenced. However, [1] demonstrated that the process of embedding Boltzmann machines in larger quantum annealer architectures is problematic when huge weights and biases are needed to emulate the Boltzmann machine’s logical nodes using chains and clusters of physical qubits, because of the limited number of qubits available. Dynex's quantum-as-a-service (QaaS) technology provides a more scalable alternative and can be used to train models with millions of variables. Both the number of training iterations and the accuracy matter especially when real-world models are to be trained.

> GitHub: Computing on the Dynex Neuromorphic Platform: Image Classification

Scientific background: Dixit V, Selvarajan R, Alam MA, Humble TS and Kais S (2021) Training Restricted Boltzmann Machines With a D-Wave Quantum Annealer. Front. Phys. 9:589626. doi: 10.3389/fphy.2021.589626; Sleeman, Jennifer, John E. Dorband and Milton Halem. “A Hybrid Quantum enabled RBM Advantage: Convolutional Autoencoders For Quantum Image Compression and Generative Learning.” Defense + Commercial Sensing (2020).

Quantum-Support-Vector-Machine (QSVM)

In another example, we ran simulations for the Standard Banknote Authentication dataset and measured the following Key Performance Indicators (KPIs) using a Quantum Support Vector Machine (QSVM):

Accuracy: the fraction of samples that have been classified correctly

Precision: proportion of correct positive identifications over all positive identifications

Recall: proportion of correct positive identifications over all actual positives

F1 score: harmonic mean of the model’s precision and recall
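The four KPIs defined above can be computed directly from the counts of true and false positives and negatives. The following is a small sketch with made-up labels. The function name `classification_kpis` and the choice to return 0.0 when a denominator is zero are assumptions, not part of the original benchmark.

```python
def classification_kpis(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative labels only: 3 true positives, 3 true negatives,
# 1 false positive and 1 false negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
kpis = classification_kpis(y_true, y_pred)
# -> all four KPIs equal 0.75 for this toy example
```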

Here are the results:

Figure: Quantum (D-Wave) and Neuromorphic (Dynex) based SVM model training is superior to traditional support vector machines. We used scikit-learn's LIBSVM implementation with Sequential Minimal Optimisation as the benchmark.

> GitHub: Quantum Support Vector Machine with the Dynex PyTorch Plugin

Scientific background: Rounds, Max and Phil Goddard. “Optimal feature selection in credit scoring and classification using a quantum annealer.” (2017).